The SHSAT no longer tests only what your child knows—it tests how your child performs inside an adaptive algorithm under sustained pressure

The SHSAT has transitioned from a fixed exam to a Computer-Adaptive Test (CAT) with Technology-Enhanced Items (TEI).

That shift changes everything.

A score no longer reflects how many question types a student has memorized or how many practice tests they have completed.

It is measured dynamically—under pressure, across changing difficulty, and against the clock.

What matters now is not just what a student knows, but how consistently they perform as conditions change.

Families routinely invest $25,000–$80,000 in SHSAT preparation and advisory services without ever training inside the actual computer-adaptive testing environment.

That’s precisely why we built the Simulator.

How Technology-Enhanced Items (TEI) Function on the SHSAT

Why Traditional SHSAT Prep Is Failing

The Ghost In The Machine

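The current SHSAT is built on Item Response Theory (IRT): an adaptive algorithm estimates a student's ability from each response and adjusts the difficulty of the next question accordingly.

Traditional paper tests are linear: every student sees the same questions in the same order, and every question carries the same weight.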

The adaptive algorithm doesn’t just wait for an answer—it measures the timing and stability of every click.
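For readers who want to see the adaptive idea concretely, here is a minimal sketch of an adaptive loop under a two-parameter logistic (2PL) IRT model. It is an illustration only, not the SHSAT's actual engine: the item bank, its parameter values, and the function names (`p_correct`, `pick_next_item`, `estimate_ability`, `run_session`) are all assumptions, and this simplified version looks only at whether each answer is right or wrong.

```python
import math

# Hypothetical item bank: (discrimination a, difficulty b). Values are made up.
ITEM_BANK = [(1.0, -2.0), (1.0, -1.0), (1.2, 0.0), (1.0, 1.0), (1.0, 2.0)]

def p_correct(theta, a, b):
    """2PL model: probability that a student of ability theta answers correctly."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def item_information(theta, a, b):
    """Fisher information of an item at ability theta under the 2PL model."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)

def pick_next_item(theta, used):
    """Choose the unused item that is most informative at the current estimate."""
    candidates = [i for i in range(len(ITEM_BANK)) if i not in used]
    return max(candidates, key=lambda i: item_information(theta, *ITEM_BANK[i]))

def estimate_ability(responses):
    """Posterior-mean ability on a grid, using a standard normal prior.

    responses: list of (item_index, answered_correctly) pairs.
    """
    grid = [g / 10.0 for g in range(-40, 41)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for idx, correct in responses:
            p = p_correct(theta, *ITEM_BANK[idx])
            w *= p if correct else (1.0 - p)
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)

def run_session(answers):
    """Run a short adaptive session from a scripted list of True/False answers."""
    theta, used, responses = 0.0, set(), []
    for correct in answers:
        idx = pick_next_item(theta, used)
        used.add(idx)
        responses.append((idx, correct))
        theta = estimate_ability(responses)
    return theta, [idx for idx, _ in responses]
```

Calling `run_session([True, True])` starts on the middle item and then moves to a harder one, while `run_session([False, False])` moves to an easier one. That is the core of "adaptive": the next question depends on the running ability estimate, not on a fixed order.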

The Pacing Gap

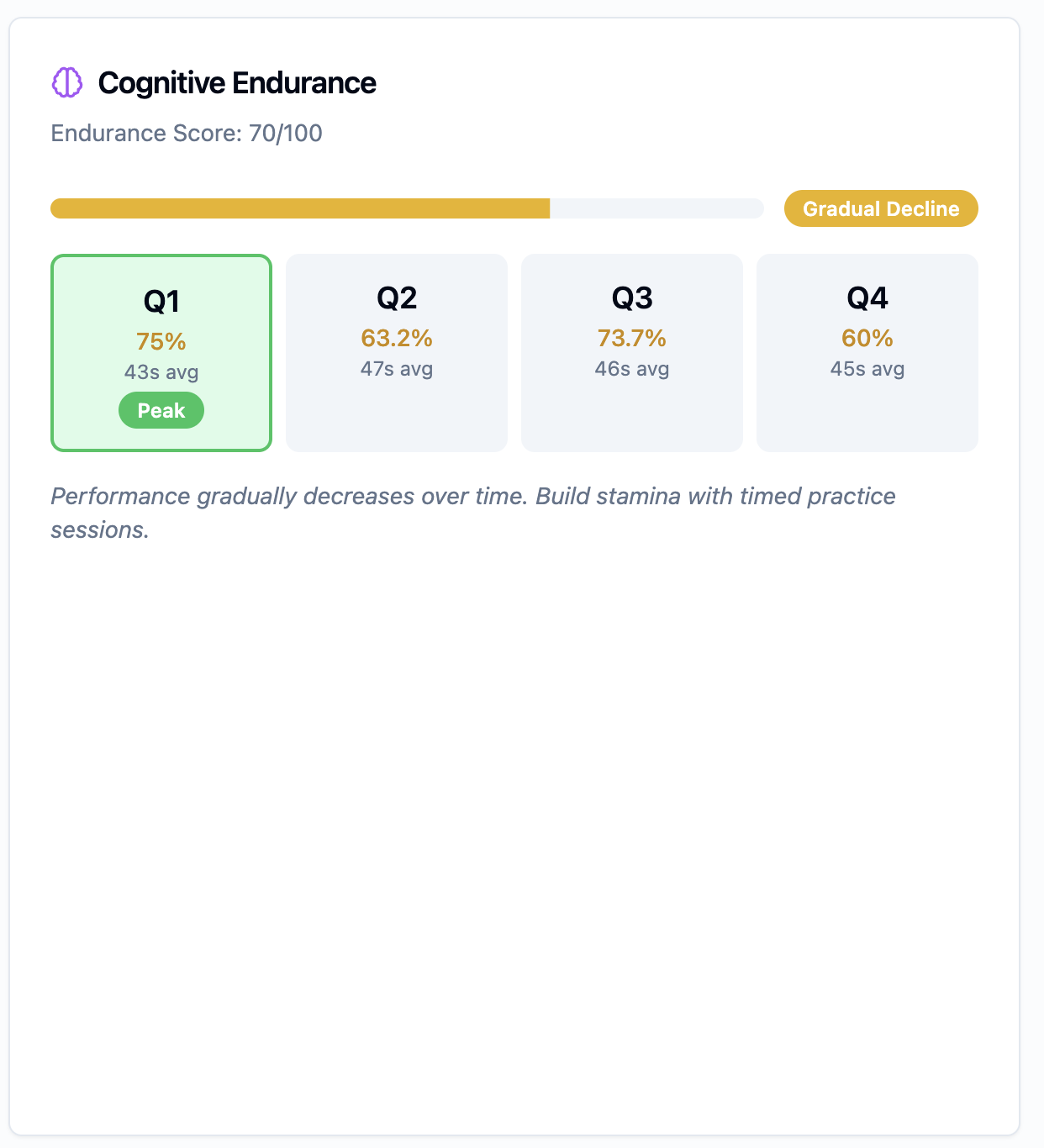

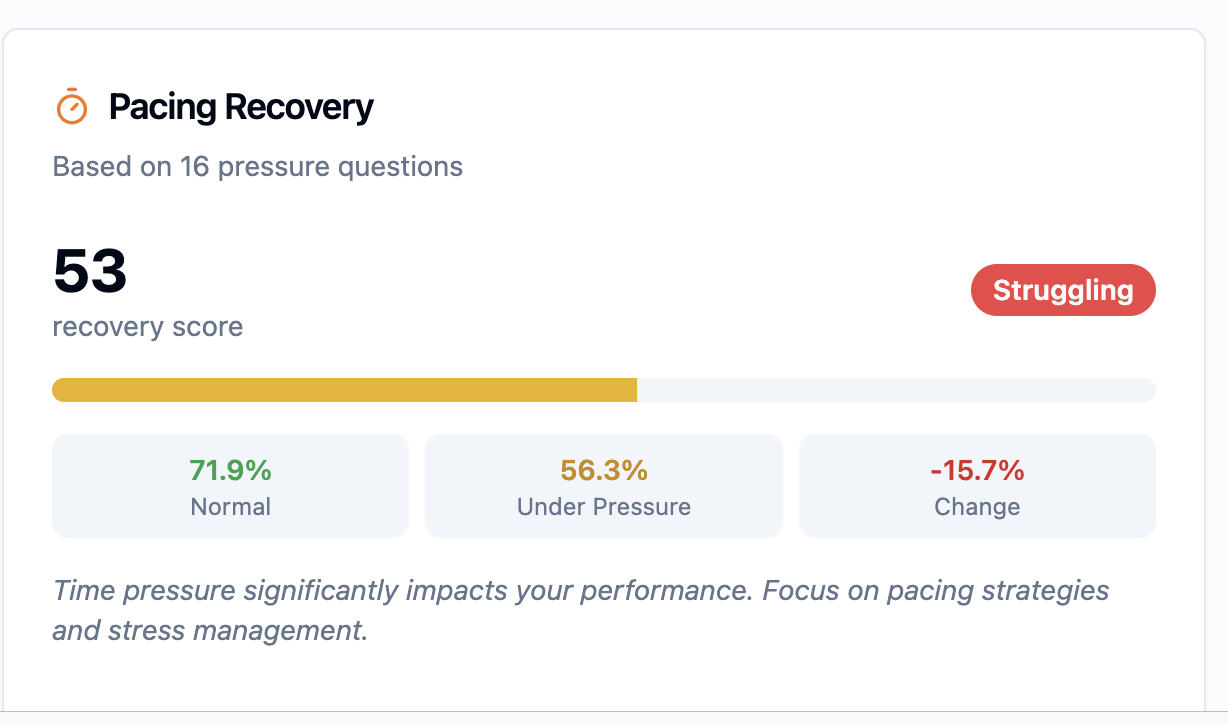

Many students experience gradual slowdowns in speed or accuracy in the final 45 minutes of a three-hour exam.

In a CAT environment, a single fatigue-related error on a seemingly “easy” question late in the test can drop a student’s percentile rank by 10–20 points.

The algorithm reads that miss as evidence of lower ability, which pulls down the final score.

Strong students don’t struggle on the SHSAT because they lack knowledge.

They lose points because the exam now penalizes performance breakdowns that traditional preparation doesn’t measure or address:

Technology-Enhanced Items that require multi-step interaction, sequencing, and on-screen execution

Digital pacing pressure that compounds fatigue and accelerates late-section errors

Adaptive difficulty changes that shift the scoring environment in real time

Performance dips in the final section, when cognitive endurance matters most

Yet most students are still preparing with static materials that cannot observe, measure, or correct these dynamics.

The result is a dangerous mismatch:

High practice scores

Low predictive accuracy

False confidence heading into test day

Effort has not disappeared—but effort without measurement is no longer reliable.

What the SHSAT Is Actually Measuring

The modern SHSAT evaluates performance as a sequence, not a snapshot.

Specifically, it observes:

Performance under pressure

How accuracy changes as cognitive load increases.

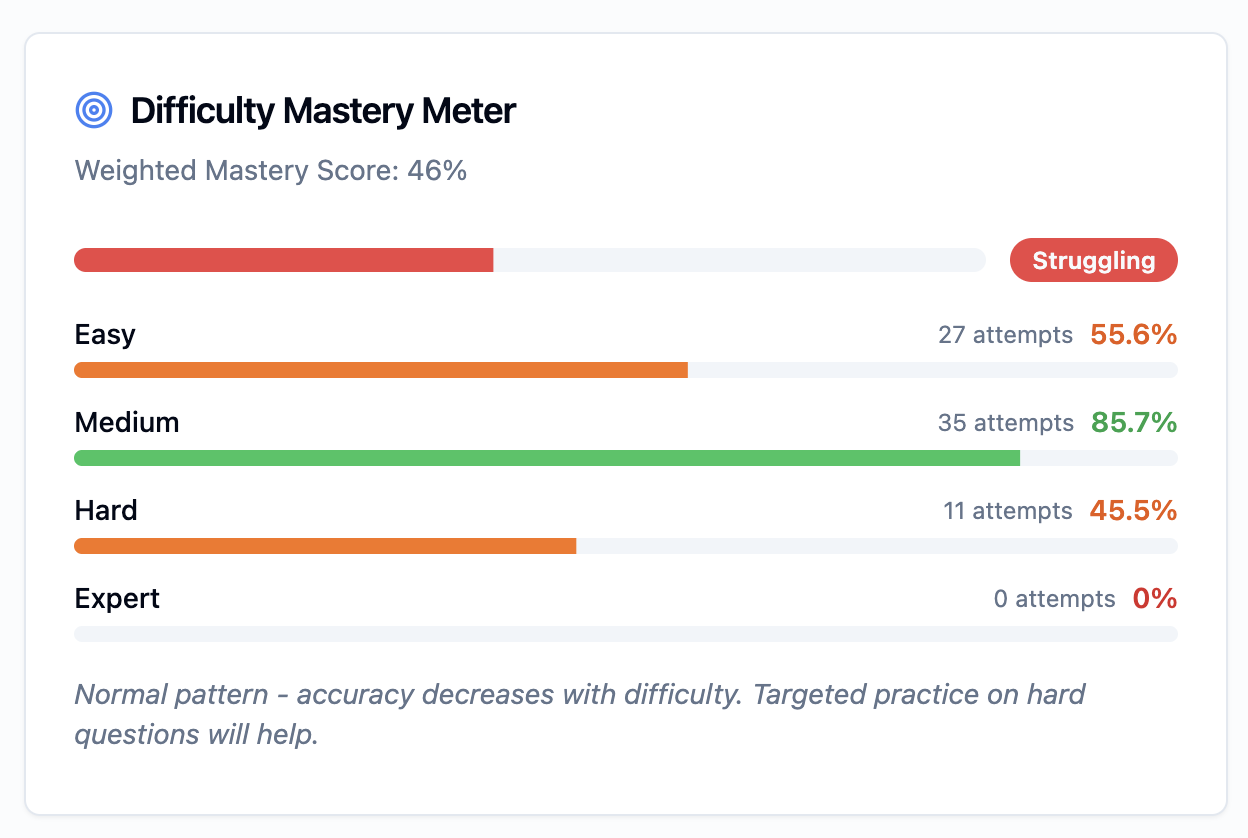

Response to shifting difficulty

How students adapt when the test pushes above or below their comfort range.

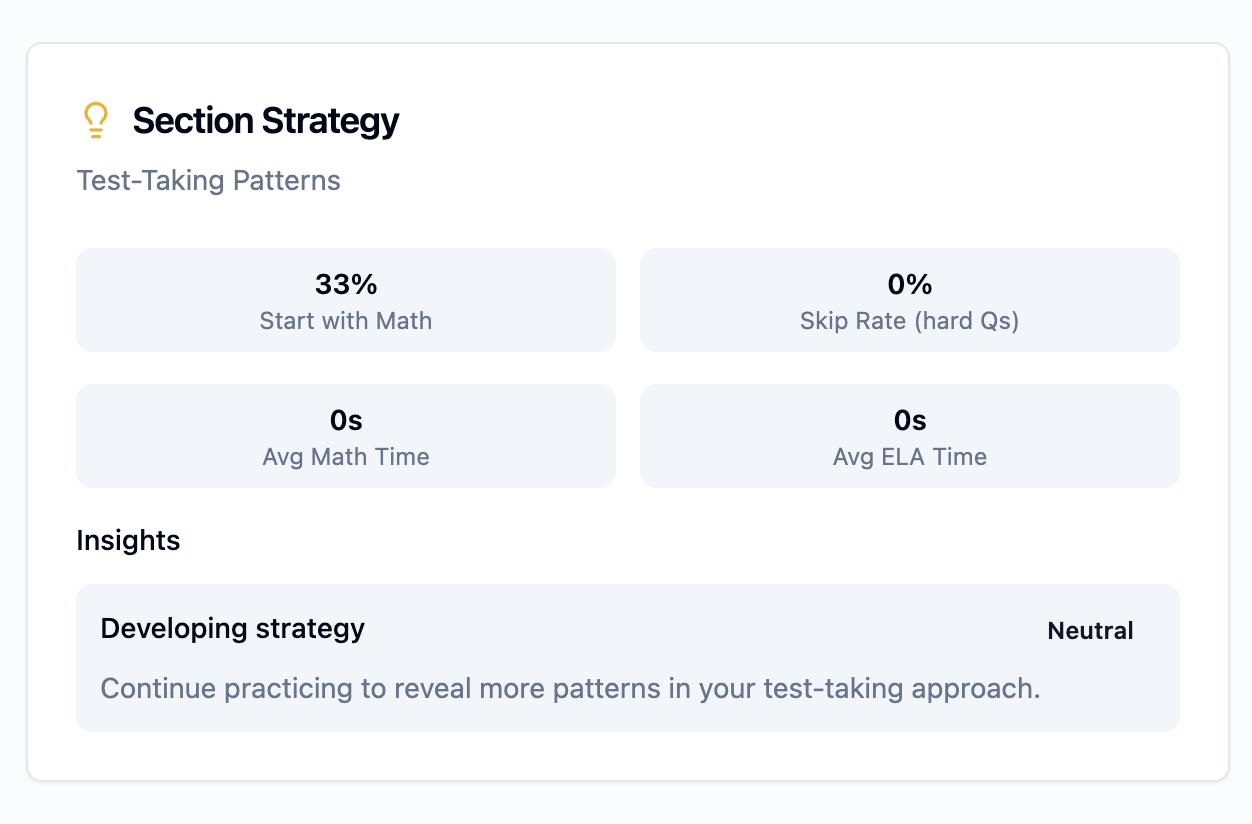

Time-constrained decision-making

How pacing choices affect downstream performance.

Consistency over duration

Where accuracy decays as mental fatigue sets in.

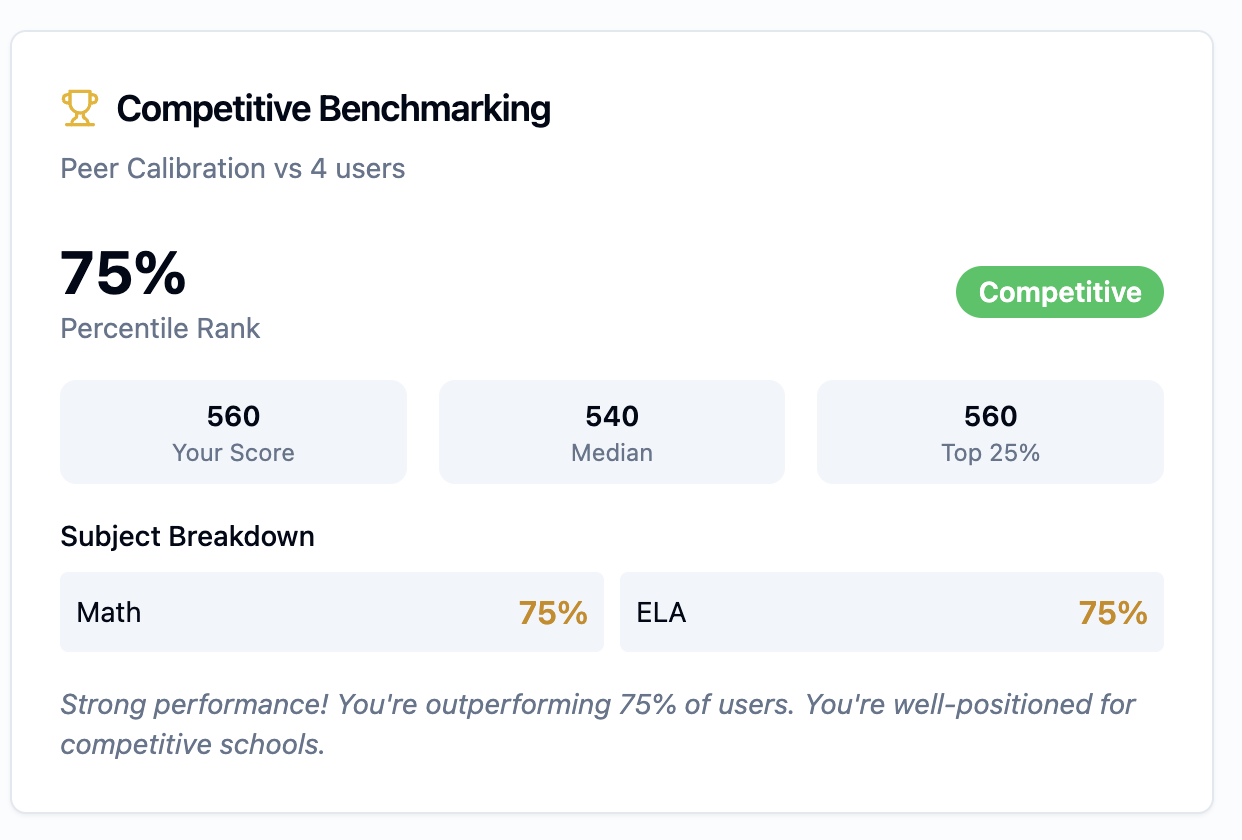

Two students with identical content knowledge can produce very different outcomes depending on how they behave inside these conditions.

The exam is not asking, “Do you know this?”

It is asking, “Can you maintain performance as the system adapts around you?”

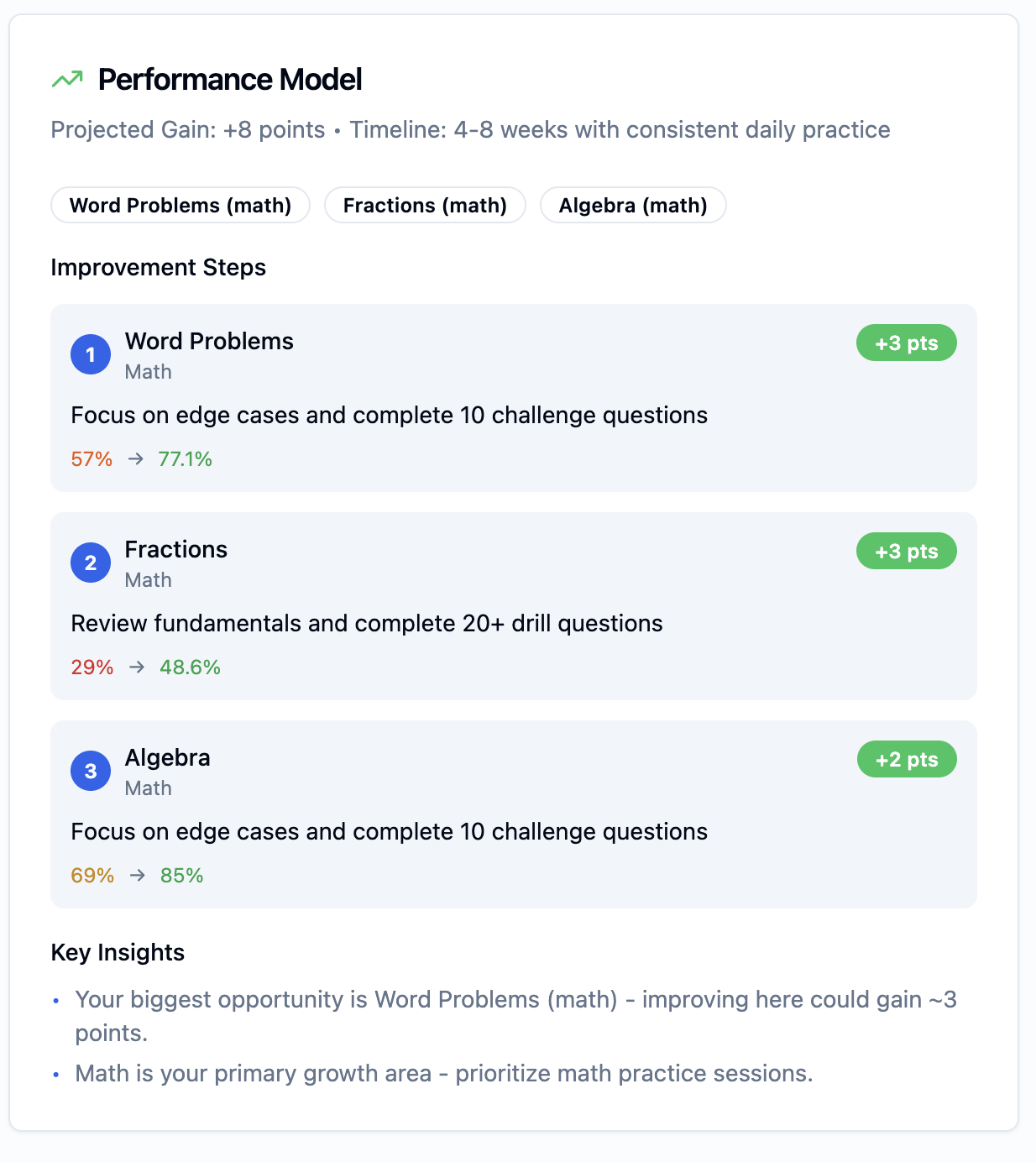

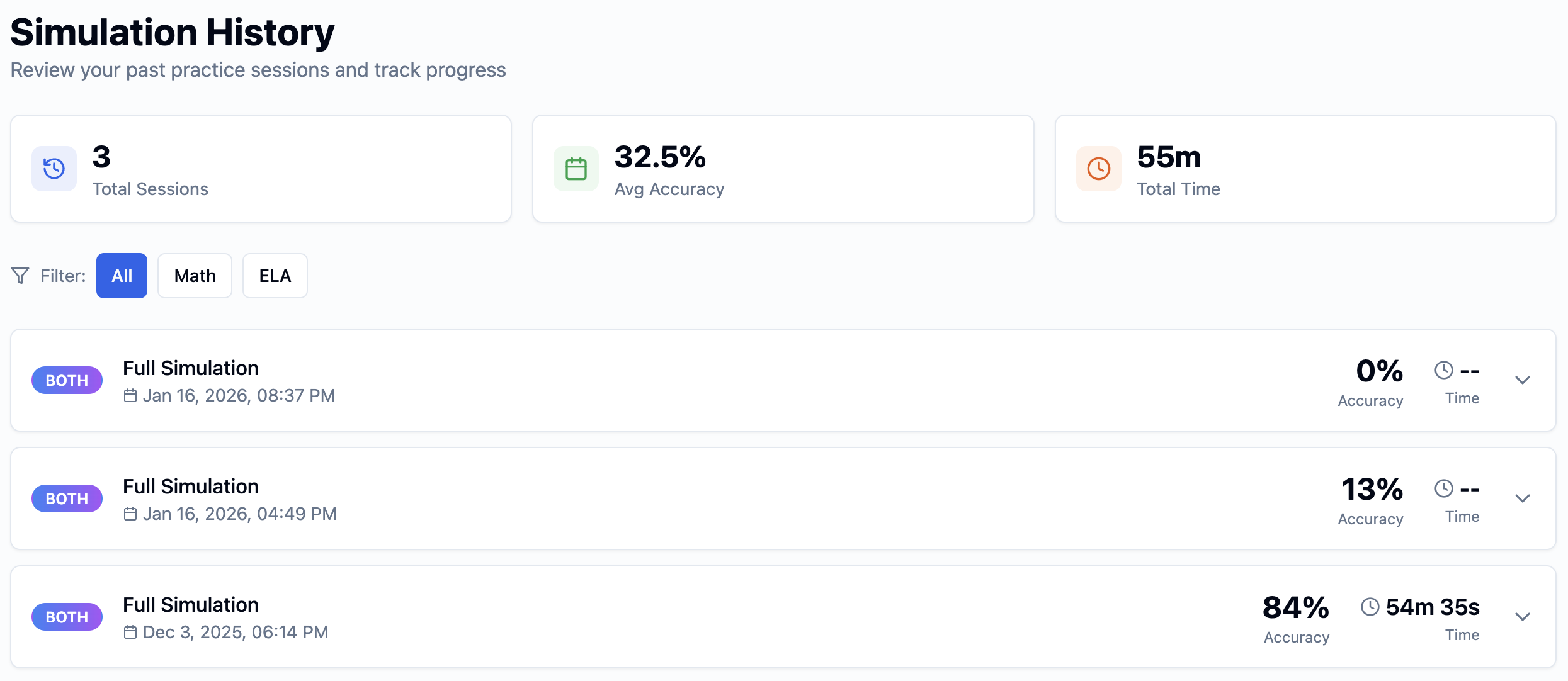

The SHSAT (CAT) Measurement Framework

To prepare for an adaptive exam, preparation itself must be adaptive.

The SHSAT (CAT) framework is built around one principle:

You cannot improve what you cannot see.

Instead of focusing on volume, this framework prioritizes:

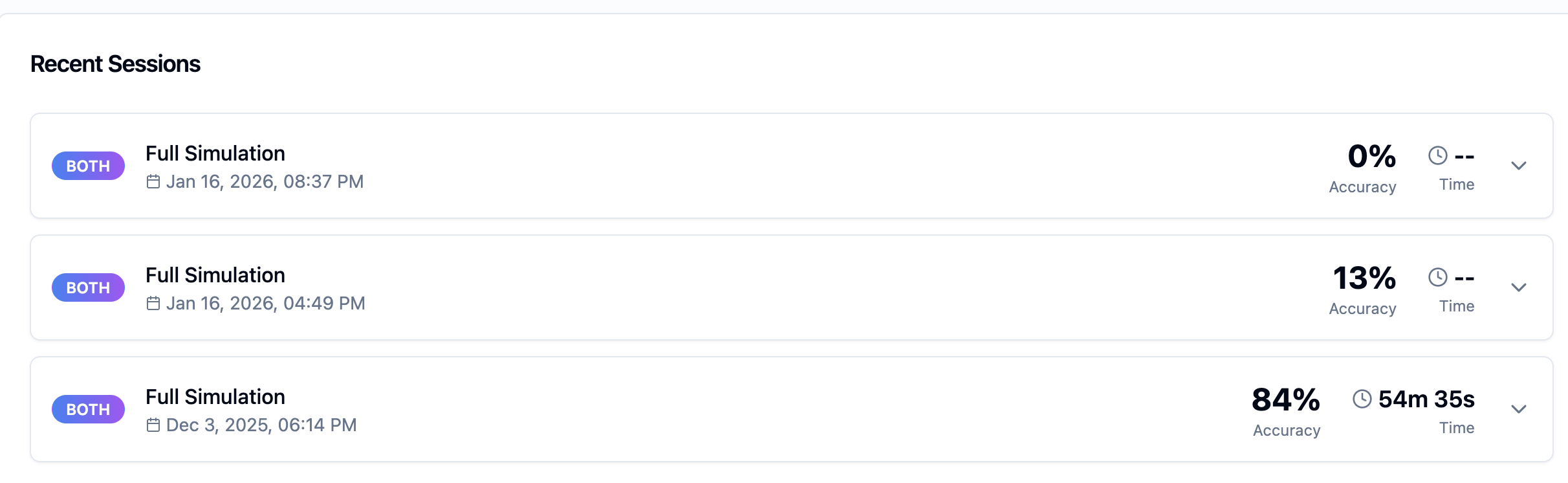

Continuous ability estimation

Real-time difficulty adjustment

Pattern detection across sessions

Longitudinal performance tracking

Preparation is treated as a measurement problem first, and a study problem second.

This allows decisions to be made based on data, not intuition.

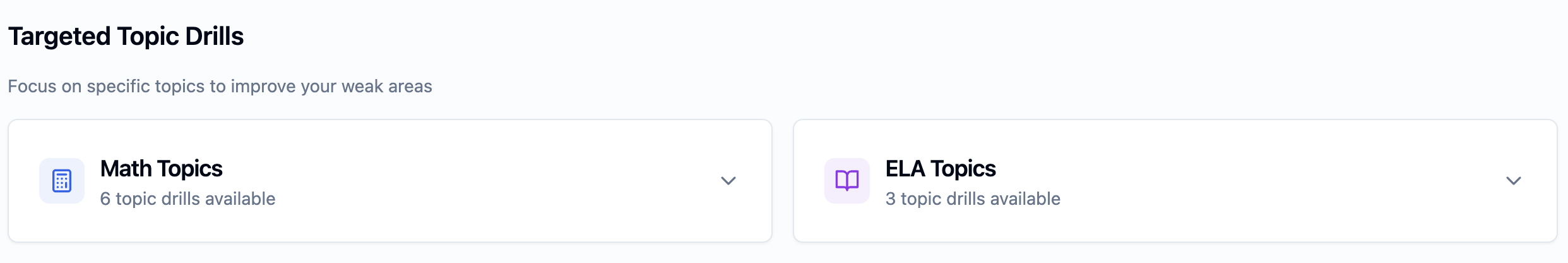

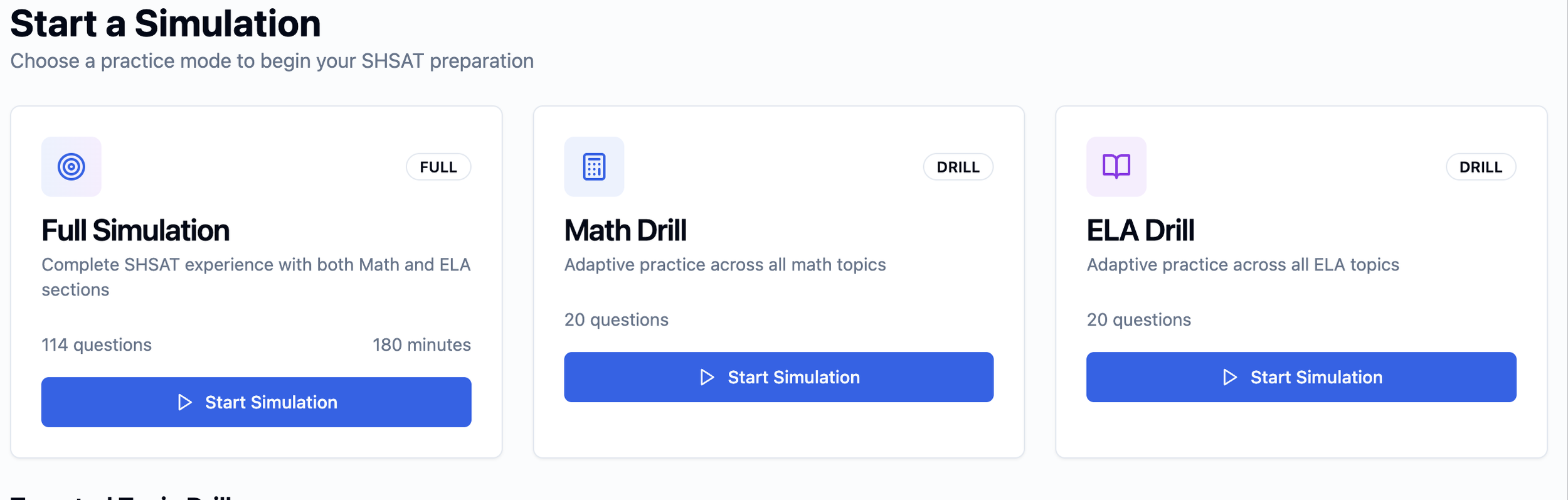

What Measurement Looks Like in Practice

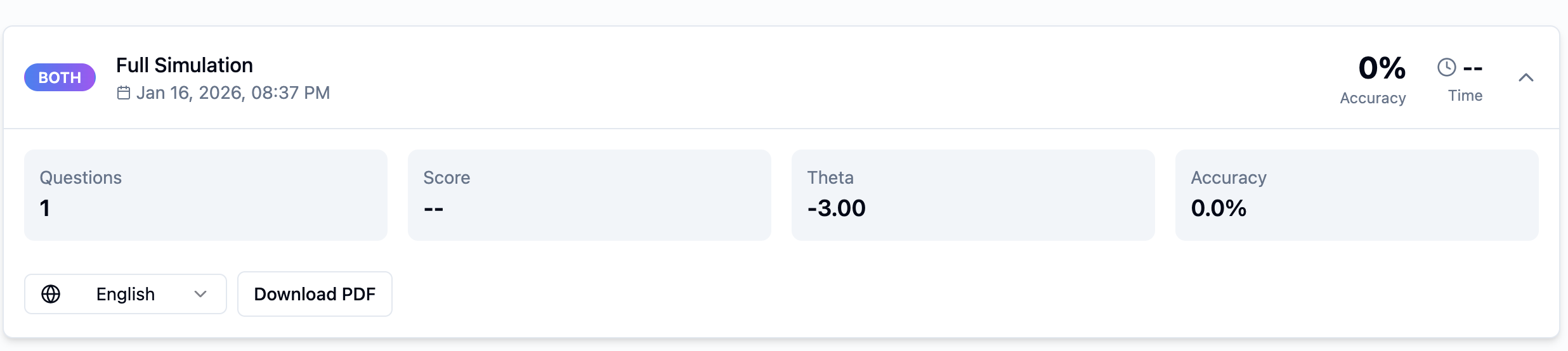

What you see here is not a practice test.

It is a live measurement environment.

Each session records:

Ability movement over time

Accuracy by difficulty band

Timing behavior at decision points

Where performance stabilizes or breaks
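As a rough illustration of two of the items listed above (accuracy by difficulty band, and where performance breaks), the sketch below summarizes a single session log. The record layout, the band cutoffs, the sample data, and the function names are hypothetical; they are not the Simulator's actual schema.

```python
from collections import defaultdict

# Made-up session log: one record per question, in the order answered.
SAMPLE_LOG = [
    {"difficulty": -1.0, "correct": True},
    {"difficulty": 0.0, "correct": True},
    {"difficulty": 1.0, "correct": True},
    {"difficulty": 0.2, "correct": True},
    {"difficulty": -0.8, "correct": False},
    {"difficulty": 1.2, "correct": False},
    {"difficulty": 0.1, "correct": False},
    {"difficulty": -1.5, "correct": True},
]

def difficulty_band(difficulty):
    """Map a numeric difficulty to a coarse band (cutoffs are arbitrary)."""
    if difficulty < -0.5:
        return "easy"
    if difficulty <= 0.5:
        return "medium"
    return "hard"

def accuracy_by_band(log):
    """Fraction of questions answered correctly within each difficulty band."""
    totals, hits = defaultdict(int), defaultdict(int)
    for rec in log:
        band = difficulty_band(rec["difficulty"])
        totals[band] += 1
        hits[band] += 1 if rec["correct"] else 0
    return {band: hits[band] / totals[band] for band in totals}

def decay_onset(log, window=4, threshold=0.5):
    """Start index of the first run of `window` consecutive questions whose
    accuracy is below `threshold`, or None if no such run exists."""
    flags = [1 if rec["correct"] else 0 for rec in log]
    for end in range(window, len(flags) + 1):
        if sum(flags[end - window:end]) / window < threshold:
            return end - window
    return None
```

On the sample log, `accuracy_by_band(SAMPLE_LOG)` reports half of the hard questions correct, and `decay_onset(SAMPLE_LOG)` points to the stretch where three misses in a row pull rolling accuracy under 50%.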

These dashboards do not exist to “motivate” students.

They exist to answer specific questions:

Is improvement real or volatile?

Which errors are structural versus situational?

Where does performance decay begin?

Which interventions will actually move the needle?

This is the difference between doing more and doing what matters.
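The first question above, whether improvement is real or volatile, can be made concrete with a simple trend line. The sketch below fits a least-squares line through a series of session scores and reports the slope (average gain per session) and the scatter around that line. The function name and the choice of a straight-line fit are assumptions made for illustration, not a description of how the dashboards compute it.

```python
import math

def trend_and_volatility(scores):
    """Return (slope per session, residual standard deviation) for a score series."""
    n = len(scores)
    if n < 3:
        raise ValueError("need at least three sessions")
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residuals measure how far each session lands from the fitted trend.
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, scores)]
    volatility = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    return slope, volatility
```

A steady series such as `[10, 12, 14, 16]` gives a slope of 2 with essentially no scatter, while `[10, 16, 10, 16]` shows a positive slope but large scatter, which is the pattern of gains that may not hold on test day.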

Why Oversight Depth Changes Outcomes

Measurement alone is not enough.

Data must be interpreted correctly, or it becomes noise.

Different families require different levels of oversight:

Some need confirmation that progress is stable

Others need intervention before decay becomes entrenched

Some require full predictive modeling and endurance analysis

This is why oversight is structured in levels.

More oversight does not mean “more features.”

It means greater visibility into why outcomes are changing.

When oversight increases, decisions become faster, clearer, and more precise.

Please Read Carefully

This program is for families who:

Want objective insight, not reassurance

Understand that high-stakes exams require measurement, not guesswork

Value data integrity over marketing promises

Prefer fewer decisions made correctly over many decisions made emotionally

This program is not for families who:

Are looking for quick fixes or shortcuts

Prefer unlimited access without structure

Want guarantees without diagnostics

Expect progress to be linear or effortless

This distinction is intentional.

Why Access Is Structured

Because this is a measurement system, capacity matters.

Measurement accuracy degrades when a system takes on more students than it can review carefully.

For that reason:

Access is controlled

Placement is reviewed before enrollment

Documentation exists to protect data integrity and families alike

This process is not friction.

It is quality control.

The Next Step Is Simple

If this framework aligns with how your family thinks about preparation, the next step is to review what each tier measures, its capacity limits, and the placement options.

[ Get Started ]